Your Engineering-Grade Testing Platform for AI Prompts

Stop shipping broken prompts. Test systematically across models, catch regressions before production, and collaborate with your team in a shared workspace.

Compatible with leading AI providers, including OpenAI, Anthropic, Google, and xAI

Our Growing Impact

Real metrics from our community of prompt engineers building with confidence

Total Prompts Tested

Engineers are actively validating their AI prompts to ensure quality

Test Runs Completed

Comprehensive testing across models, catching issues before they reach users

5x

Faster Iteration Cycles

Teams ship prompt improvements 5x faster with systematic testing in place

The Hidden Cost of Untested Prompts

Problems

What teams struggle with today

Prompts break in production

Your carefully crafted prompts work perfectly in development, then fail silently when models update or context changes.

No visibility into changes

Someone tweaks a prompt to fix one issue and breaks three others. You find out when users complain.

Manual testing wastes hours

Copy-pasting prompts between playground interfaces. Spreadsheets full of test cases. Time you could spend building.

The Real Cost

Impact on your team and business

Lost trust in prompt reliability

Without systematic validation, you can't confidently rely on your prompts. Every change is a leap of faith.

Wasted time investigating failures

When prompts behave unexpectedly, teams spend hours tracking down what changed and why it matters.

Engineering talent on manual tasks

Your skilled developers are stuck copy-pasting between interfaces instead of building innovative features.

Our Solutions

How we solve these challenges

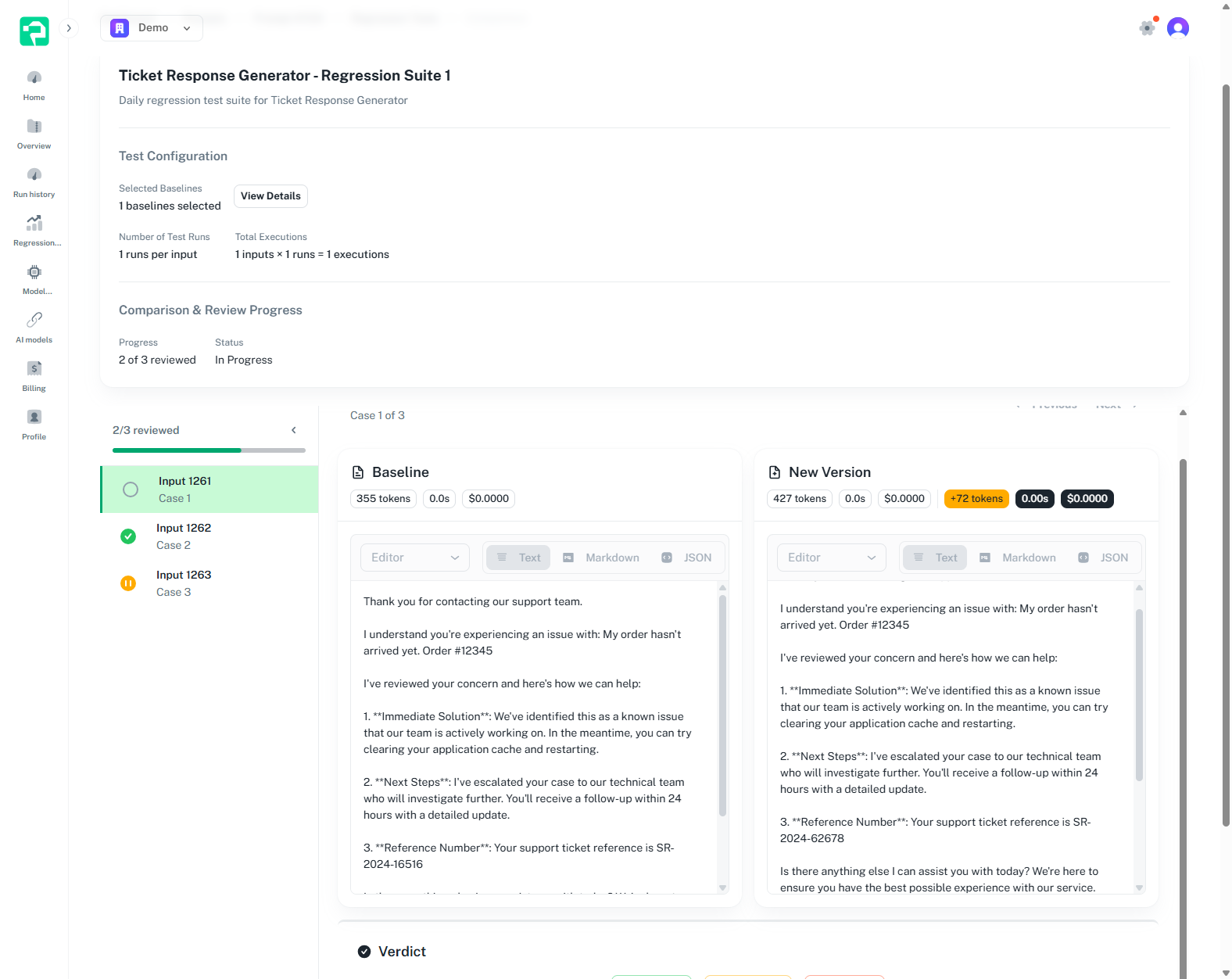

Build confidence through testing

Compare outputs against baselines to understand exactly how your prompts behave across changes and models.

Parallel development workflows

Track multiple prompt versions simultaneously. Experiment with alternatives and compare results side-by-side.

Systematic test automation

Define once, test everywhere. Parameterized tests with comprehensive input variations and automated test execution.
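To illustrate "define once, test everywhere", here is a minimal Python sketch of parameterized test generation: a single prompt template is expanded into one test case per combination of input values. The function name `expand_cases` and the case layout are hypothetical illustrations, not Prompting Workbench's API.

```python
from itertools import product
from string import Template


def expand_cases(template: str, params: dict[str, list[str]]) -> list[dict]:
    """Render one prompt template for every combination of parameter values (hypothetical helper)."""
    keys = list(params)
    cases = []
    # itertools.product yields the Cartesian product of all value lists.
    for values in product(*(params[k] for k in keys)):
        bindings = dict(zip(keys, values))
        cases.append({
            "inputs": bindings,  # kept so later checks can refer to the inputs
            "prompt": Template(template).substitute(bindings),
        })
    return cases


cases = expand_cases(
    "Summarize the following $doc_type for a $audience reader.",
    {"doc_type": ["bug report", "release note"],
     "audience": ["technical", "non-technical"]},
)
for case in cases:
    print(case["prompt"])
```

Two parameters with two values each yield four test cases; adding a third value to either list grows the suite automatically without writing new cases by hand.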

Explore Our Core Features

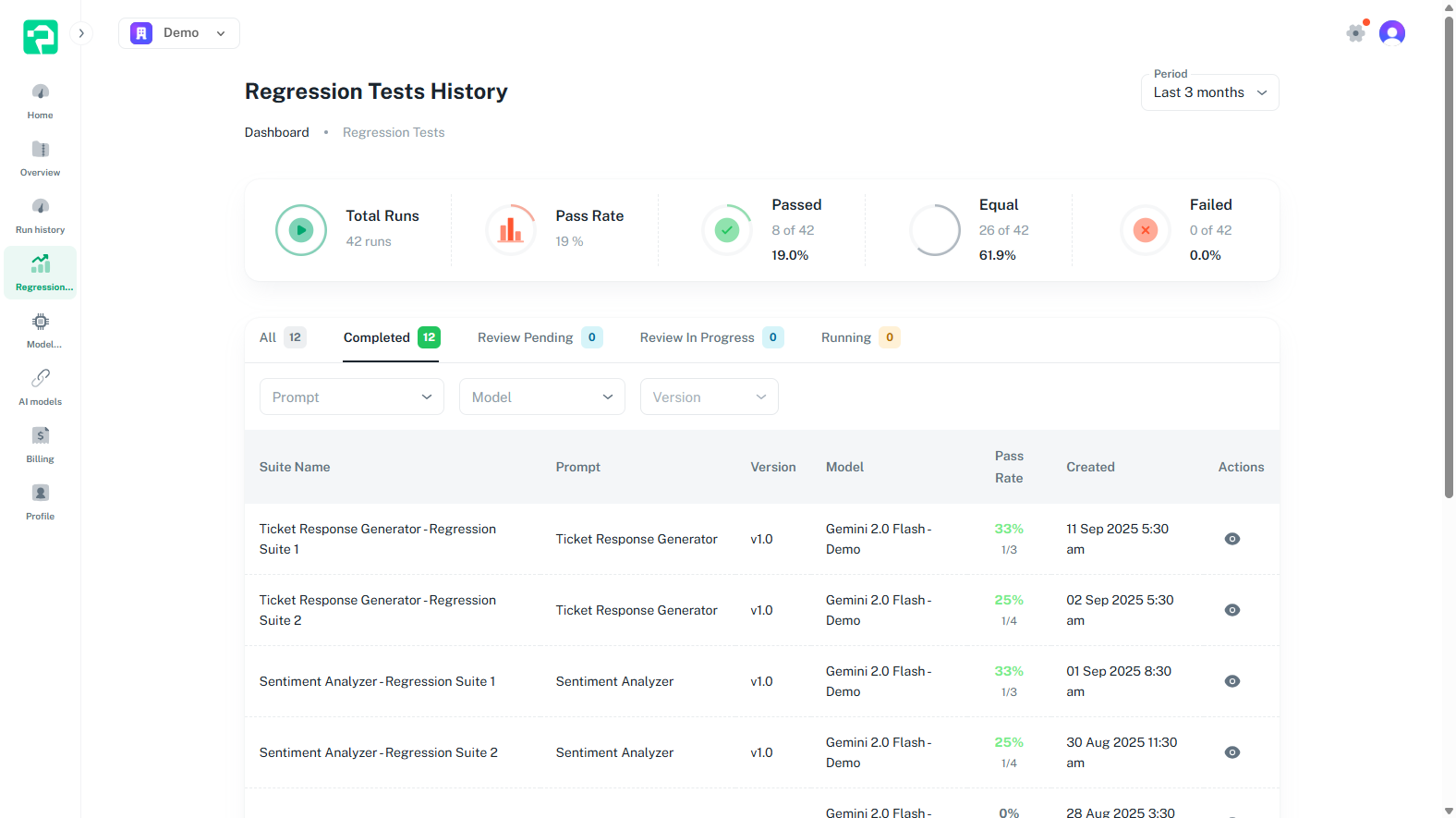

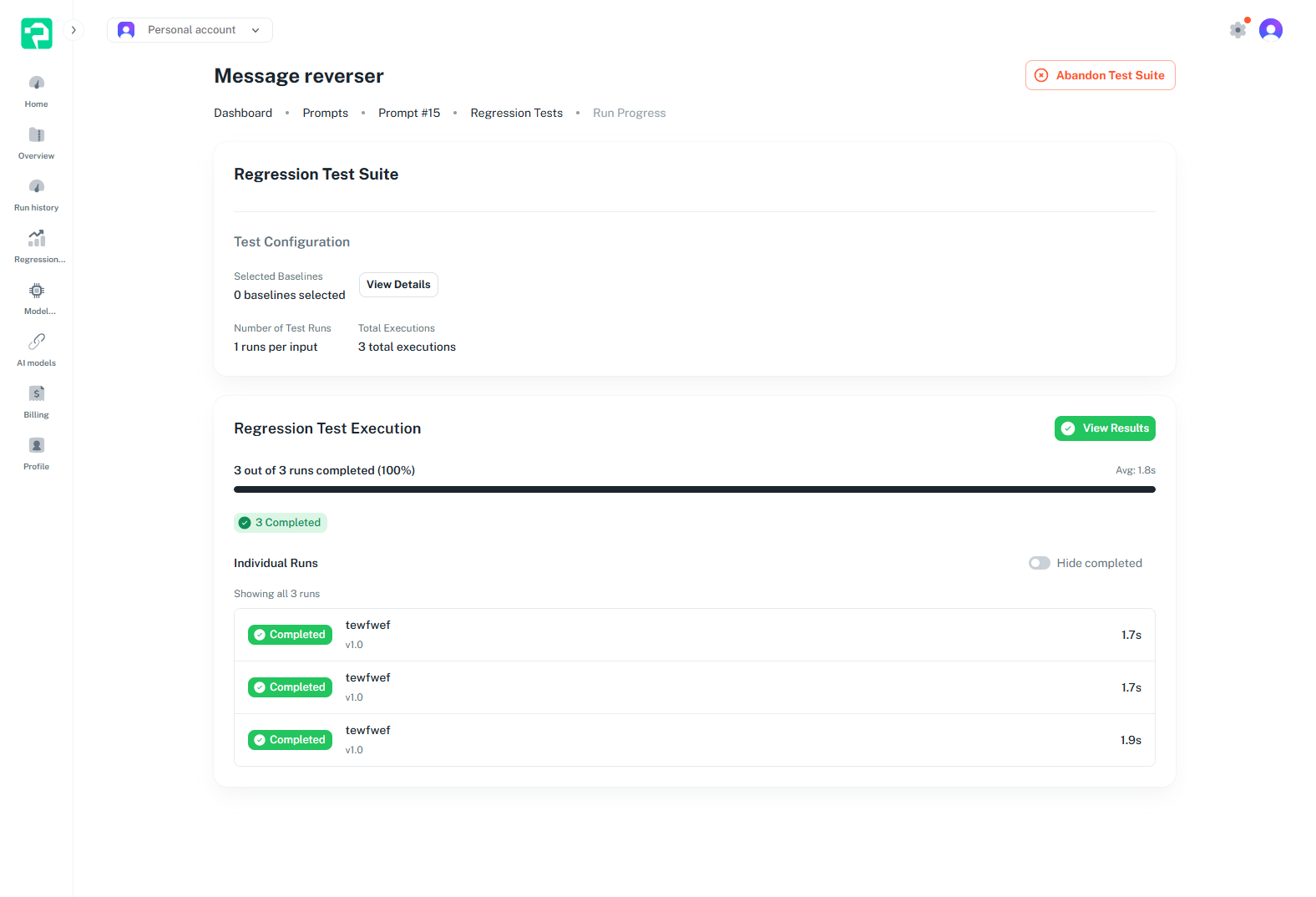

Regression Testing

Never break production prompts again

Protect your AI features from unexpected failures with automated regression testing. Set baselines from working prompts, run continuous tests against modifications, and get instant alerts when changes impact behavior. Compare outputs systematically to track changes and ensure consistency.
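The baseline idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: outputs from a known-good prompt are stored per test case, and a new run is flagged when its output falls below a similarity threshold. The lexical `difflib` ratio and the 0.9 threshold are assumptions chosen for the example; the platform's actual comparison method is not described here, and real setups often use semantic or rubric-based checks instead.

```python
import difflib


def similarity(a: str, b: str) -> float:
    """Lexical similarity in [0, 1]; a stand-in for whatever comparison a real tool uses."""
    return difflib.SequenceMatcher(None, a, b).ratio()


def check_regressions(baseline: dict[str, str], current: dict[str, str],
                      threshold: float = 0.9) -> list[str]:
    """Return IDs of test cases that are missing or drifted below the threshold."""
    flagged = []
    for case_id, expected in baseline.items():
        actual = current.get(case_id)
        if actual is None or similarity(expected, actual) < threshold:
            flagged.append(case_id)
    return flagged


# Hypothetical outputs saved from a working prompt version...
baseline = {
    "greet": "Hello, Ada! How can I help you today?",
    "refund": "Refunds are processed within 5 business days.",
}
# ...and outputs from a modified version of the same prompt.
current = {
    "greet": "Hello, Ada! How can I help you today?",
    "refund": "I cannot help with refunds.",
}
print(check_regressions(baseline, current))  # ['refund']
```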

Multi-Model Validation

Test once, validate everywhere

Run the same tests across multiple AI models simultaneously. Compare quality, performance, and costs across models from OpenAI, Anthropic, Google, and xAI (Grok). Make data-driven decisions about which model best fits your use case and budget.
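A minimal Python sketch of the multi-model idea: every test case runs against every model, and each model gets a pass rate and an estimated cost. The "models" here are stub functions with canned answers standing in for real API clients, and the per-call prices are hypothetical placeholders, not real provider pricing.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelReport:
    model: str
    pass_rate: float
    estimated_cost: float


# A test case pairs a prompt with a check that decides pass/fail for any model's output.
TestCase = tuple[str, Callable[[str], bool]]


def run_across_models(models: dict[str, Callable[[str], str]],
                      cost_per_call: dict[str, float],
                      cases: list[TestCase]) -> list[ModelReport]:
    """Run every test case against every model and summarize each model's results."""
    reports = []
    for name, call in models.items():
        passed = sum(1 for prompt, check in cases if check(call(prompt)))
        reports.append(ModelReport(
            model=name,
            pass_rate=passed / len(cases),
            estimated_cost=cost_per_call[name] * len(cases),
        ))
    return reports


# Stub "models" with canned answers (hypothetical; a real harness would call provider APIs).
answers_a = {"What is 2 + 2?": "4", "Capital of France?": "Paris"}
answers_b = {"What is 2 + 2?": "5", "Capital of France?": "Paris"}
models = {
    "model-a": lambda p: answers_a.get(p, ""),
    "model-b": lambda p: answers_b.get(p, ""),
}
prices = {"model-a": 0.002, "model-b": 0.0005}  # hypothetical cost per call
cases = [
    ("What is 2 + 2?", lambda out: out.strip() == "4"),
    ("Capital of France?", lambda out: "Paris" in out),
]
for report in run_across_models(models, prices, cases):
    print(report)
```

Keeping the checks independent of any one model is what lets the same suite score every provider on equal terms.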

Version Control

Track and manage prompt iterations

Professional version management designed specifically for prompt engineering. Track every change with sequential and alternative version paths, experiment with variations, and never lose a working prompt. Complete audit trail for tracking your iterative improvements.
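One way to model "sequential and alternative version paths" is a tree in which each version points to its parent. The Python sketch below is a hypothetical data structure for illustration, not Prompting Workbench's internal design: versions are only ever added, so a working prompt is never overwritten, and committing twice from the same parent creates an alternative path.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptVersion:
    version_id: str
    text: str
    parent: Optional[PromptVersion] = None

    def lineage(self) -> list[str]:
        """Version IDs from the original prompt down to this one."""
        chain: list[str] = []
        node: Optional[PromptVersion] = self
        while node is not None:
            chain.append(node.version_id)
            node = node.parent
        return chain[::-1]


class PromptHistory:
    """Append-only store: versions are never edited or deleted, only added."""

    def __init__(self, first_text: str):
        self.versions: dict[str, PromptVersion] = {"v1": PromptVersion("v1", first_text)}

    def commit(self, parent_id: str, text: str) -> PromptVersion:
        """Add a child of parent_id; two commits on the same parent create alternative paths."""
        new_id = f"v{len(self.versions) + 1}"
        version = PromptVersion(new_id, text, self.versions[parent_id])
        self.versions[new_id] = version
        return version


history = PromptHistory("Summarize the text.")
v2 = history.commit("v1", "Summarize the text in 3 bullet points.")
v3 = history.commit("v2", "Summarize the text in 3 bullet points for executives.")
v4 = history.commit("v1", "Summarize the text in one sentence.")  # alternative path
print(v3.lineage())  # ['v1', 'v2', 'v3']
print(v4.lineage())  # ['v1', 'v4']
```

The lineage of any version doubles as its audit trail: it lists every ancestor the prompt passed through.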

Performance Analytics

Data-driven prompt optimization

Deep insights into prompt performance with detailed metrics and visualization tools. Track success rates, response quality, consistency scores, and costs. Monitor trends and patterns to improve your prompts continuously.
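The exact formulas behind these metrics are not specified, so the following Python sketch uses two common, simple definitions as assumptions: success rate is the share of test cases that passed, and consistency score is the share of repeated runs of one prompt that produced the most common (normalized) output.

```python
from collections import Counter


def success_rate(passed: list[bool]) -> float:
    """Fraction of test cases that passed (assumed definition)."""
    return sum(passed) / len(passed) if passed else 0.0


def consistency_score(outputs: list[str]) -> float:
    """Share of repeated runs matching the most common output; 1.0 means fully consistent (assumed definition)."""
    if not outputs:
        return 0.0
    # Light normalization so trivial case/whitespace differences are not counted as drift.
    normalized = [o.strip().lower() for o in outputs]
    _, top_count = Counter(normalized).most_common(1)[0]
    return top_count / len(normalized)


runs = ["Positive", "positive", "Positive ", "Neutral"]
print(consistency_score(runs))  # 0.75
print(success_rate([True, True, False, True]))  # 0.75
```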

How It Works

Four simple steps to transform your prompt engineering workflow

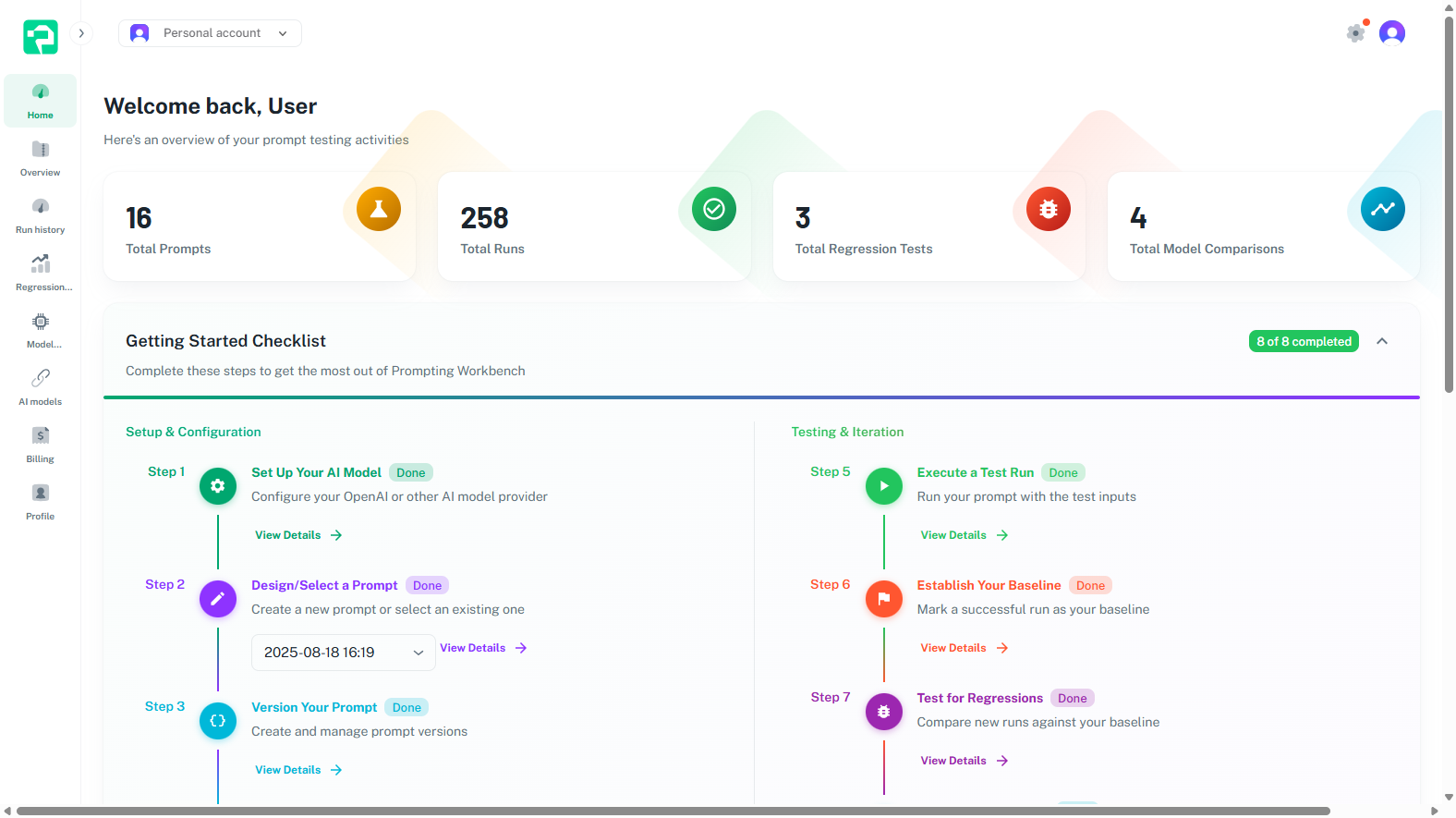

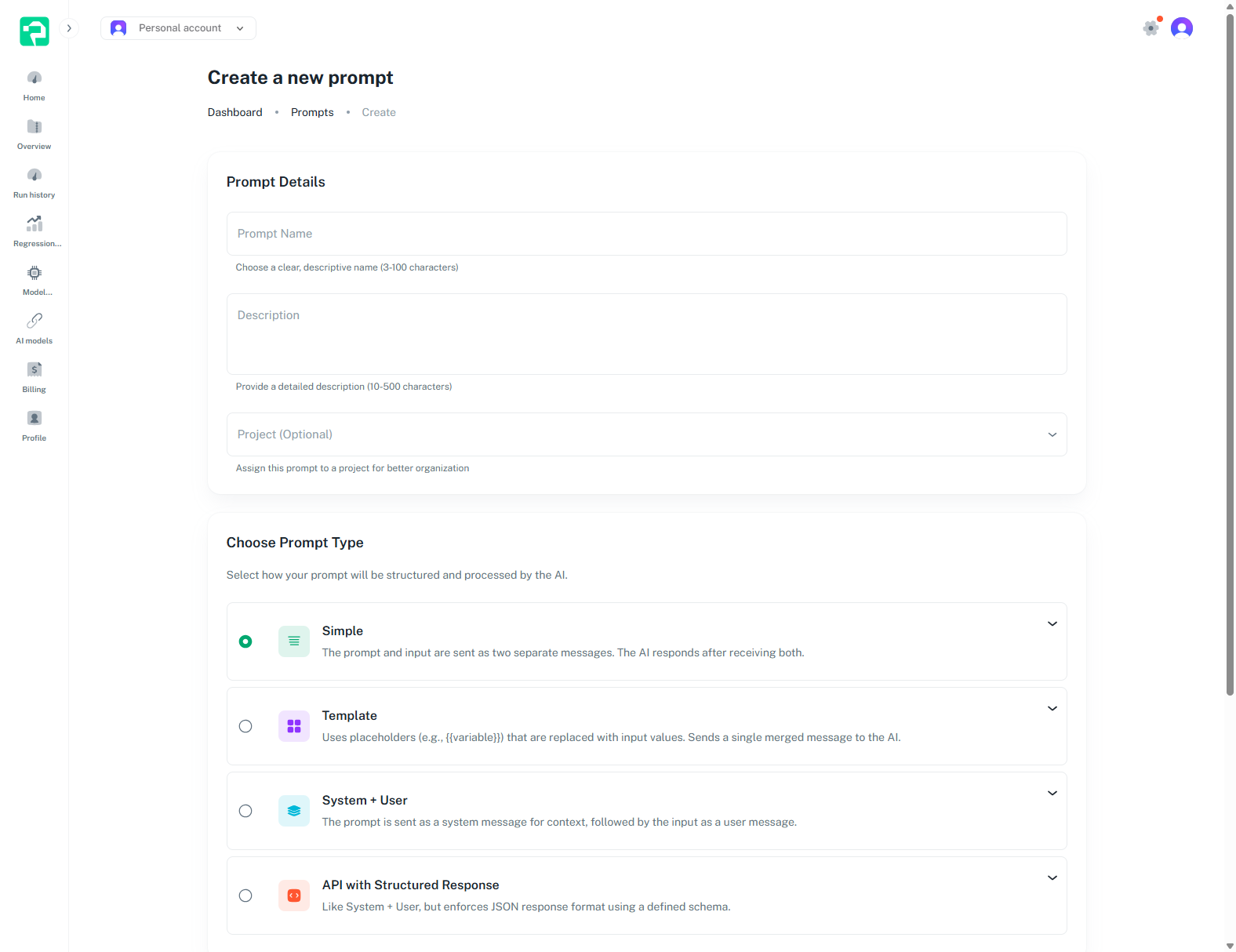

Create & Version Your Prompts

Start by creating your prompts and organizing them into projects. Each modification creates a new version, maintaining a complete history.

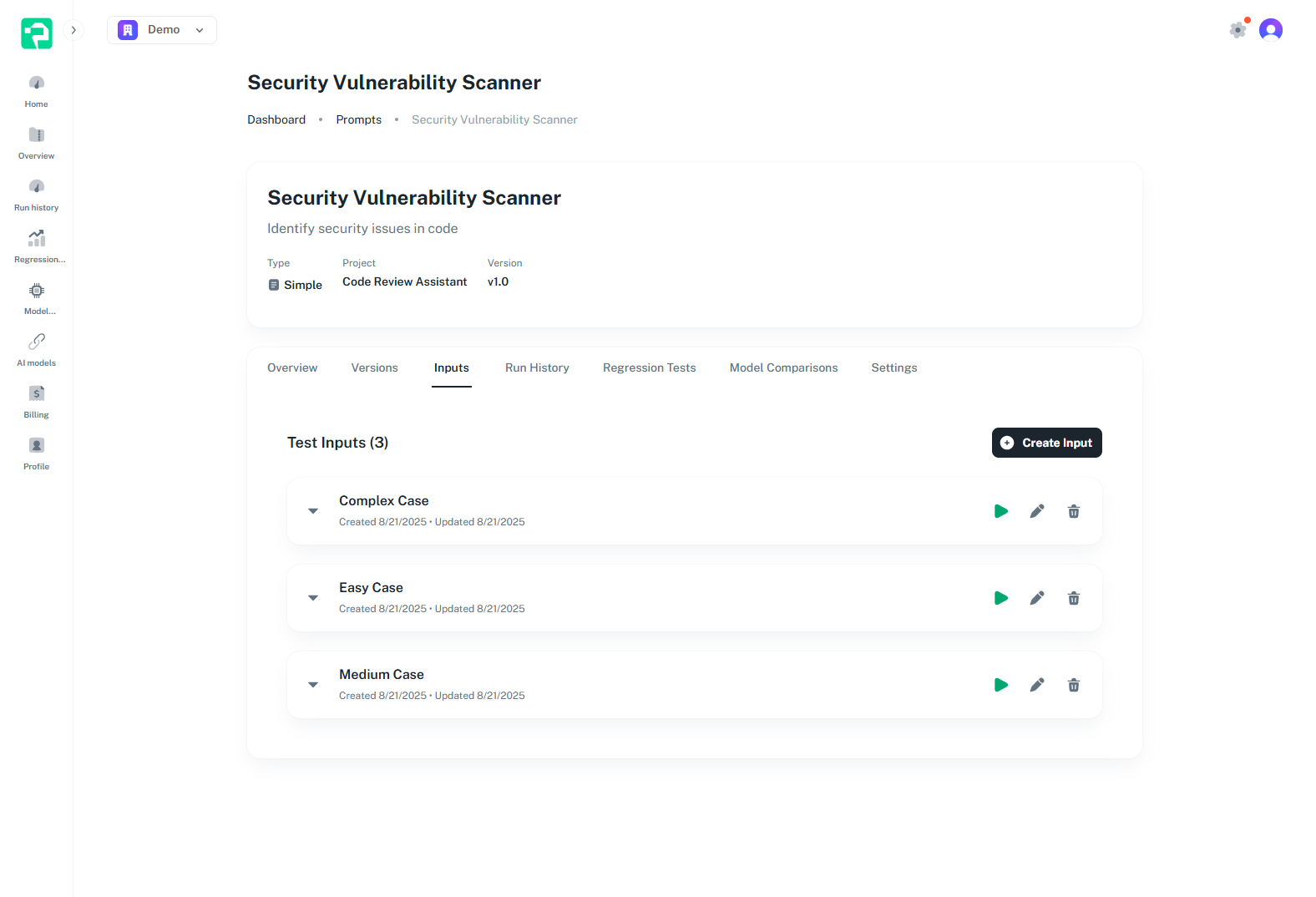

Define Test Inputs

Set up comprehensive test cases with various inputs that represent real-world scenarios your prompts will encounter.

Run Automated Tests

Execute your test suite across different prompt versions and AI models. Get consistent, reproducible results every time.

Analyze & Iterate

Review detailed comparisons, identify improvements, and refine your prompts based on data-driven insights.
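The "analyze" step often comes down to a side-by-side comparison of two prompt versions on the same test cases. Here is a minimal Python sketch, assuming each run produces a pass/fail result per test case ID; the function name and report layout are hypothetical.

```python
def compare_versions(results_a: dict[str, bool],
                     results_b: dict[str, bool]) -> dict[str, list[str]]:
    """Group the test cases both versions ran by how version B did relative to version A."""
    report: dict[str, list[str]] = {"improved": [], "regressed": [], "unchanged": []}
    for case_id in sorted(results_a.keys() & results_b.keys()):
        a, b = results_a[case_id], results_b[case_id]
        if b and not a:
            report["improved"].append(case_id)
        elif a and not b:
            report["regressed"].append(case_id)
        else:
            report["unchanged"].append(case_id)
    return report


results_v1 = {"billing": True, "greeting": True, "refund": False, "shipping": False}
results_v2 = {"billing": True, "greeting": False, "refund": True, "shipping": False}
print(compare_versions(results_v1, results_v2))
# {'improved': ['refund'], 'regressed': ['greeting'], 'unchanged': ['billing', 'shipping']}
```

Listing regressions next to improvements makes the trade-off explicit: version 2 fixes `refund` but breaks `greeting`, which an overall pass rate alone (2 of 4 for both versions) would hide.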

Built for Every Team

Whether you're testing, optimizing, or managing AI prompts, Prompting Workbench adapts to your workflow

QA Engineers

Ensure prompt consistency across updates and catch regressions before they reach production.

KEY BENEFITS

Automated regression testing

Version comparison tools

Test suite management

Performance tracking

Finally, a way to apply software testing principles to AI prompts!

Prompt Engineers

Systematically optimize prompts with data-driven insights and comprehensive testing.

KEY BENEFITS

A/B testing capabilities

Model comparison

Performance analytics

Version control

Transform prompt engineering from art to science with measurable results.

Product Managers

Track AI performance metrics and ensure product quality meets business requirements.

KEY BENEFITS

Executive dashboards

Quality metrics

Cost analysis

Compliance tracking

Get visibility into AI performance and make informed product decisions.

Measurable Impact

Join teams who have transformed their prompt engineering workflow with data-driven testing

90%

Edge Cases Caught

Before reaching production

5x

Faster Testing Cycles

Automated vs. manual testing

30+

Hours Saved Monthly

Per prompt engineer

85%

Fewer Regressions

Through systematic testing

Trusted by Engineering Teams Worldwide

From startups to enterprises, teams rely on Prompting Workbench to ensure their AI prompts deliver consistent, reliable results in production.

Ready to Transform Your Prompt Engineering?

Start testing your prompts with confidence. No credit card required.

Free tier available

Set up in 5 minutes